Since its inception, one of the core strengths of Kubernetes has been its orchestration and scheduling capabilities. The scheduler weighs various parameters and application requirements, such as CPU and memory, before placing pods accordingly.

Kubernetes can also limit how many resources a pod may use. Pods share the resources of the node they run on, so preventing compute starvation is critical. But if we can prevent compute starvation, shouldn’t we also prevent network starvation?

Organizations are becoming more efficient at running more pods per node, to take full advantage of available resources. Consequently, it’s important to consider the impact bandwidth consumption can have on overall pod performance.

After all, if a pod began downloading terabytes of traffic at a huge rate, it could exhaust the available bandwidth and affect its neighbors. On its own, Kubernetes has only limited means of preventing such a scenario, but it is now possible with the Cilium Bandwidth Manager.

In this post we will discuss Cilium Bandwidth Manager, starting with a review of how rate-limiting is done in Kubernetes traditionally and some of its constraints. We will then look at how Cilium addresses some of these limitations before looking at a practical implementation of Cilium Bandwidth Manager.

Note: This blog post is part of a series on Bandwidth Management with Cilium. In the second part, we will dive into a revolutionary TCP congestion control algorithm called BBR and how Cilium became the first cloud native platform to support it (with the help of eBPF of course!). If you’re interested in offering latency and throughput improvements while controlling pod network contention (and really, who isn’t?), then this series is for you.

Congestion Handling

In the hardware networking world, we have had the ability to impose limits on traffic consumption for a very long time. In simple terms, network engineers would specify a limit on a physical interface and, when traffic exceeded that limit, the router would queue up and drop packets. TCP then adjusted its throughput through TCP congestion control algorithms. (Note: I am intentionally keeping it simple for brevity, but if you want to truly understand TCP Congestion Control, please read the TCP Congestion Control e-book.)

Imposing similar limits for pods is not as straightforward but is necessary to avoid a potential “noisy neighbor” scenario where network-hungry pods hog the bandwidth. Kubernetes has provided support for traffic shaping through a bandwidth CNI plugin. An operator would apply pod annotations such as kubernetes.io/ingress-bandwidth and kubernetes.io/egress-bandwidth, and the CNI plugin would rate-limit the pod’s ingress and egress traffic.

Unfortunately, this feature is still experimental, and the plugin has severe limitations that keep it from scaling to production use cases.

In this KubeCon 2022 session presented by my Isovalent teammates Daniel Borkmann and Christopher M. Luciano, the CNI plugin’s limitations are explained in more detail.

To summarize the key challenges with the existing CNI bandwidth plugin implementation:

- On the ingress side (controlling traffic entering the pod), the CNI bandwidth plugin implements queueing at the virtual Ethernet (veth) device. By that point the traffic has already entered the host, and packets sit in a queue waiting for the qdisc to process them through an algorithm called Token Bucket Filter (TBF) before they can enter the pod. (qdisc stands for Queueing Discipline and is essentially the Linux traffic control scheduler.)

Tokens roughly correspond to bytes. Initially, the TBF is stocked with tokens which correspond to the amount of traffic that can be burst in one go. Tokens arrive at a steady rate, until the bucket is full.

If no tokens are available, packets are queued, up to a limit. The TBF then calculates the token deficit and throttles until the first packet in the queue can be sent.

The consequence is that TBF, by holding on to packets until there are enough tokens, can increase delay.

This type of algorithm is also not built for multi-core and multi-queue scenarios.

A multi-queue NIC spreads packets across multiple queues that are serviced by multiple CPU cores, which can improve network performance significantly. While the host’s physical NIC is probably multi-queue capable (as most modern NICs are), all traffic eventually funnels into the single Token Bucket queue, which hinders those multi-queue performance gains. This limitation is described as a single lock contention point.

- On the egress side (controlling traffic leaving the pod), the CNI plugin again has to work around limitations on how traffic can be shaped with TBF. Linux can only shape traffic on the egress side of a device, and traffic leaving the pod arrives as ingress on the host side of the veth. The traffic is therefore redirected to an Intermediate Functional Block (IFB) device so that shaping can be applied there.

The consequence is that a hop is artificially inserted in the network path in order to implement shaping. And of course, the more hops you add in a network, the greater the latency is.
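To make the token bucket behaviour described above more concrete, here is a minimal Python sketch. It is a simplified model and not the Linux TBF qdisc: the class name, its parameters, and the one-packet-at-a-time interface are assumptions made for illustration, and queue limits and drops are left out. It shows how a packet that finds the bucket empty is held back until enough tokens have accumulated.

```python
class TokenBucket:
    """Simplified token bucket shaper (illustrative, not the kernel TBF)."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float):
        self.rate = rate_bytes_per_s   # token refill rate (1 token = 1 byte)
        self.burst = burst_bytes       # bucket capacity
        self.tokens = burst_bytes      # the bucket starts full
        self.clock = 0.0               # time of the last update, in seconds

    def send(self, arrival: float, size: int) -> float:
        """Return the time at which a packet of `size` bytes may leave."""
        # A packet cannot leave before the packet queued ahead of it.
        now = max(arrival, self.clock)
        # Refill tokens for the elapsed time, capped at the bucket size.
        self.tokens = min(self.burst, self.tokens + (now - self.clock) * self.rate)
        if self.tokens < size:
            # Token deficit: hold the packet until enough tokens have arrived.
            now += (size - self.tokens) / self.rate
            self.tokens = size
        self.tokens -= size
        self.clock = now
        return now


# Three 1500-byte packets arrive at once; the bucket only covers the first.
bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
departures = [bucket.send(arrival=0.0, size=1500) for _ in range(3)]
print(departures)  # [0.0, 1.5, 3.0]
```

The first packet uses the initial burst allowance, while the second and third each wait 1.5 seconds for new tokens. That waiting time is the added delay mentioned above.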

In summary, if we were to use the existing CNI plugin to limit pod bandwidth, we would:

- increase resource consumption on the hosts,

- sacrifice network performance gains from modern NICs, and

- add hops and latency to the mix.

This is counterproductive.

The goal is therefore to implement bandwidth management but without the aforementioned limitations.

The pathway to this goal came with an Internet Hall of Fame inductee’s help.

Introducing the Timing Wheels

Van Jacobson is considered a legend in the networking world.

Not only did he co-write some of the core network diagnostic tools (like traceroute and the BPF-based tcpdump), he also contributed major improvements to TCP. His Congestion Avoidance and Control paper essentially led to the development of TCP congestion control and to the reliability of today’s Internet.

Today’s TCP congestion handling leverages queues for shaping, but at the Netdev 0x12 keynote, Van proposed that timing wheels would be a more effective mechanism than queues.

The proposal suggests putting an Earliest Departure Time (EDT) timestamp on each packet (depending on the policy and rate) and sending out packets based on the timestamp.

In our rate-limiting context, this means that a packet is never sent earlier than its timestamp dictates, which slows down the network flow.

Compared to traditional queueing models, this mechanism provides traffic performance benefits (reducing delay and avoiding unnecessary packet drops) while having a minimal CPU impact on the host.
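As a rough illustration of the EDT idea (and not Cilium’s actual eBPF code), the Python sketch below stamps each packet with an earliest departure time derived from a configured rate. The function name, the tuple layout, and the use of floating-point seconds are assumptions made for this example.

```python
def assign_departure_times(packets, rate_bytes_per_s):
    """Stamp each (arrival_time, size_bytes) packet with an earliest departure time.

    A packet is never released before its timestamp, which paces the
    flow to the configured rate.
    """
    next_slot = 0.0  # earliest time the next packet of this flow may leave
    stamped = []
    for arrival, size in packets:
        departure = max(arrival, next_slot)
        # The following packet has to wait for this one's transmission time.
        next_slot = departure + size / rate_bytes_per_s
        stamped.append((departure, size))
    return stamped


# Four 1250-byte packets arriving back to back, paced at 10 Mbit/s (1.25 MB/s).
burst = [(0.0, 1250)] * 4
stamped = assign_departure_times(burst, rate_bytes_per_s=1_250_000)
for departure, size in stamped:
    print(f"{size} bytes may leave at t={departure * 1000:.0f} ms")
```

Each packet only carries a timestamp; nothing is held in a shared bucket. A scheduler such as a timing wheel can then release packets in timestamp order.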

This is why Cilium implemented Bandwidth Management with EDT.

Cilium’s Bandwidth Manager Implementation

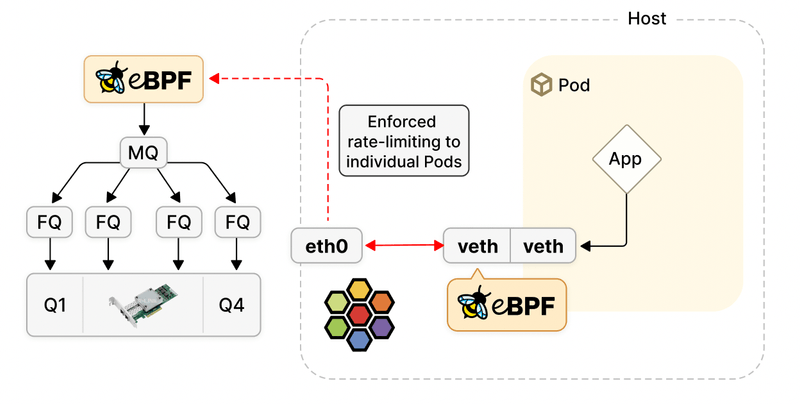

Cilium leverages some of the technologies mentioned throughout the post to impose rate-limiting:

- Cilium agent monitors the pod annotations. Operators can configure their bandwidth limits with the same annotations used by the CNI plugin (kubernetes.io/egress-bandwidth).

- Cilium agent pushes the bandwidth requirements into eBPF datapath.

- Enforcement happens on physical interfaces instead of the veth which avoids bufferbloat and improves TCP TSQ handling.

- Cilium agent implements the EDT timestamps based on the user-provided bandwidth policy

- Cilium is multi-queue aware and automatically sets up multi-queue qdiscs with fair queue leaf qdiscs (in other words, traffic arrives at a main queue (root) and is classified into several sub queues (leaf)).

- The fair queues implement the timing wheel mechanism and distribute the traffic according to the packet timestamp.

In summary:

- Cilium removes the need for an IFB, therefore reducing the latency that was introduced with the CNI plugin implementation

- Cilium leverages multi-core and multi-queue capabilities, ensuring rate-limiting is not detrimental to performance

- Cilium leverages state-of-the-art and optimal congestion avoidance technologies like Earliest Departure Time and Timing Wheel to reduce latency.

Now – platform operators don’t always care how it’s done – they want to know 1) if it works and 2) if it’s easy to operate.

From a performance perspective, we found tremendous performance gains in our testing. During the latency tests, we saw a 4x reduction of p99 latency (p99 is the worst latency that was observed by 99% of all requests – it is the maximum value if you ignore the top 1%).
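For readers less familiar with percentiles, the short Python sketch below computes a p99 value using the nearest-rank method. The latency samples are hypothetical and are not taken from our tests; the function name is also just an illustration.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Smallest sample such that at least `pct` percent of samples are <= it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[max(rank, 1) - 1]


# Hypothetical latencies in milliseconds: 98 fast requests and 2 slow ones.
latencies_ms = [1.0] * 98 + [5.0, 250.0]
print(percentile_nearest_rank(latencies_ms, 99))   # 5.0
print(percentile_nearest_rank(latencies_ms, 100))  # 250.0
```

With 100 samples, p99 ignores the single slowest request (250 ms) and reports the next one down (5 ms).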

From an operational perspective, platform engineers will find it’s extremely easy to set up – it’s literally one line of YAML.

Time for some practice!

Let’s look at how it works in the lab. You can watch this demo below or if you prefer reading or doing it yourself, see the steps below:

Bandwidth Manager is not enabled by default so, when installing Cilium, make sure to set up the right Helm flag. For this lab, I am using a GKE cluster. I am primarily following the official Cilium docs.

First, we’re deploying a GKE cluster, using the gcloud cli:

Next, we install Cilium with Helm, using the --set bandwidthManager=true flag to make sure the feature is enabled (it’s disabled by default). Note that newer Cilium releases name this Helm value bandwidthManager.enabled, so check the docs for your version. Alternatively, we could have installed Cilium with the cilium-cli command cilium install.

After doing a rollout restart of the Cilium DaemonSet and checking that the Cilium pods are running, we also check with “kubectl -n kube-system exec ds/cilium -- cilium status | grep BandwidthManager” that the feature was enabled correctly.

During our tests, we’ll run a network performance test between two pods (server/client).

The YAML below includes the specs for both pods. Note that the annotation kubernetes.io/egress-bandwidth: "10M" is how we specify the bandwidth limit, and that we use anti-affinity so that the two pods are placed on different nodes.
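A manifest matching that description can be sketched as follows. This is a Python reconstruction and not the exact manifest used in the lab: the pod names, the app label, the placeholder image, and the sleep command are assumptions, while the annotation and the anti-affinity rule come from the description above.

```python
import json


def netperf_pods(egress_limit="10M", image="example.invalid/netperf"):
    """Build a rate-limited server pod and a client pod on a different node."""
    server = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": "netperf-server",                 # hypothetical name
            "labels": {"app": "netperf-server"},      # hypothetical label
            # Egress bandwidth limit for this pod.
            "annotations": {"kubernetes.io/egress-bandwidth": egress_limit},
        },
        "spec": {"containers": [{"name": "netperf", "image": image}]},
    }
    client = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "netperf-client"},       # hypothetical name
        "spec": {
            # Anti-affinity keeps the client off the server's node.
            "affinity": {
                "podAntiAffinity": {
                    "requiredDuringSchedulingIgnoredDuringExecution": [{
                        "labelSelector": {"matchLabels": {"app": "netperf-server"}},
                        "topologyKey": "kubernetes.io/hostname",
                    }]
                }
            },
            "containers": [{"name": "netperf", "image": image,
                            "command": ["sleep", "infinity"]}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [server, client]}


manifest = netperf_pods()
print(json.dumps(manifest, indent=2))
```

kubectl accepts JSON as well as YAML, so the printed output could be piped to kubectl apply -f - once the placeholder image is replaced with a real netperf image.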

Let’s deploy the pods and start running network throughput tests. You can see below we are doing a TCP_MAERTS network performance test.

What is MAERTS, you may be wondering?

A typical netperf test is called TCP_STREAM and goes from the netperf client to the netperf server. Therefore a stream from the netperf server to the client will be STREAM backwards – MAERTS. This is how servers typically operate, with larger data flows going from server to client.

The results? 9.54 Mbps for a specified 10 Mbps limit.

When I increase the max bandwidth in the pod manifest from 10 Mbps to 100 Mbps, re-apply it and re-run the speed test, I get the increased throughput I expect (95 Mbps).
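To put those two results side by side, the small Python sketch below converts an annotation value such as "10M" into bits per second and expresses the measured throughput as a share of the limit. The helper names are hypothetical, and the parser only handles plain integers with the decimal suffixes k, M and G, not the full Kubernetes quantity syntax.

```python
# Decimal suffixes, assuming the annotation value is in bits per second.
SUFFIXES = {"k": 10**3, "M": 10**6, "G": 10**9}


def parse_bandwidth(value: str) -> int:
    """Convert an annotation value like "10M" into bits per second."""
    if value and value[-1] in SUFFIXES:
        return int(value[:-1]) * SUFFIXES[value[-1]]
    return int(value)


def utilisation(measured_mbps: float, limit: str) -> float:
    """Measured throughput as a percentage of the configured limit."""
    return measured_mbps * 1e6 / parse_bandwidth(limit) * 100


print(f"{utilisation(9.54, '10M'):.1f}%")  # 95.4%
print(f"{utilisation(95, '100M'):.1f}%")   # 95.0%
```

In both runs the measured throughput lands at roughly 95% of the configured limit.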

Summary

To wrap up, pod network rate-limiting is an appealing feature, and Cilium implements it in a very innovative manner. As we discussed, it is easy to set up using only one line of YAML.

It also highlights another great use case for eBPF: Linux gives you a built-in shaper (TBF), but there is a better idea out there (timing wheels), and rather than waiting for a new kernel version, you can use eBPF to get the new function into the kernel as eBPF code.

And while we have so far focused on traffic entering and exiting Kubernetes pods, eBPF also gives us an opportunity to improve broader Internet-level bandwidth management.

We will explore that in the next blog post. Meanwhile, you can read more about some of the topics highlighted in the post (see links below), talk to the experts behind eBPF and Cilium (book your session), or you can head over to isovalent.com/labs to play with Cilium.

Learn More

Technical Resources:

- KubeCon Session and Slides: Better Bandwidth Management with eBPF

- Official Cilium Docs on Bandwidth Manager: Cilium Docs on Bandwidth Manager

- An eBook on TCP Congestion Control: TCP Congestion Control: A Systems Approach

- Why Google decided to replace HTB with EDT and BPF (Slides and Video) Netdev 0x14 – Replacing HTB with EDT and BPF

- A detailed white paper on EDT (by Google): Carousel: Scalable Traffic Shaping at End Hosts

Prior to joining Isovalent, Nico worked in many different roles—operations and support, design and architecture, and technical pre-sales—at companies such as HashiCorp, VMware, and Cisco.

In his current role, Nico focuses primarily on creating content to make networking a more approachable field and regularly speaks at events like KubeCon, VMworld, and Cisco Live.