Enabling Multicast securely with IPsec in the cloud native landscape with Cilium

IP multicast is a bandwidth-conserving technology that reduces traffic by delivering a single stream of information simultaneously to potentially thousands of corporate recipients and homes. Traditional networks have long relied on multicast for power systems, emergency response, and audio dispatch systems, and it has found newer uses in the finance, media, and broadcasting worlds. Despite these evident efficiency benefits, cloud providers never supported multicast natively. Cloud migration projects stall when teams discover this roadblock, and time and money go into expensive workarounds. Many developers assume multicast in the cloud remains a dream and don’t realize it is available. This blog post walks you through enabling Multicast in the cloud with Isovalent Enterprise for Cilium and encrypting traffic between pods across nodes with IPsec.

What is Multicast?

Traditional IP communication allows a host to send packets to a single host (unicast transmission) or all hosts (broadcast transmission). IP multicast provides a third possibility: sending a message from a single source to selected multiple destinations across a Layer 3 network in one data stream. You can read more about the evolution of multicast in a featured blog post written by my colleague Nico Vibert.

Multicast relies on a standard called IGMP (Internet Group Management Protocol), which lets hosts join a multicast group so that many applications can share a single group IP address and receive the same data simultaneously. (For instance, IGMP is used in gaming when multiple gamers use the network simultaneously to play together.)

How does multicast work?

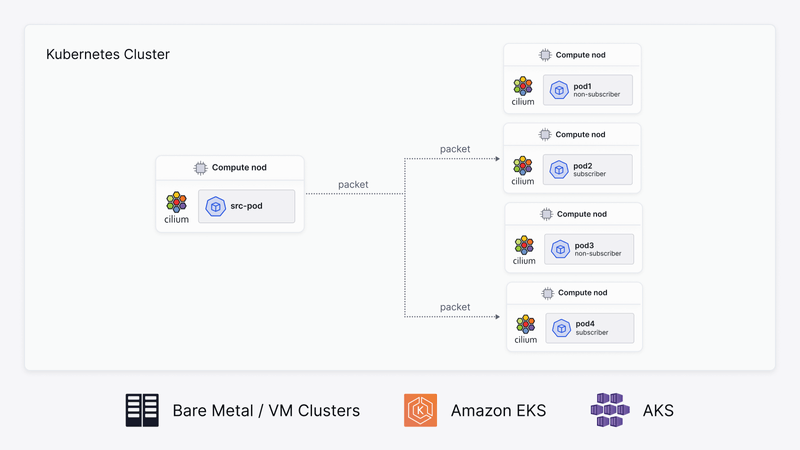

To join the multicast group, an application subscribes to the data via an IGMP join. Whenever a packet addressed to that multicast group arrives, the network replicates it and delivers a copy to the application. Simultaneously, it delivers copies to the other, say, N members or endpoints that are also part of the multicast group. Endpoints that are no longer interested in those packets leave the group using an IGMP leave message.
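As a rough illustration of the join and leave steps described above, the sketch below uses the standard socket options `IP_ADD_MEMBERSHIP` and `IP_DROP_MEMBERSHIP`; setting them asks the kernel to emit the IGMP join and leave messages on the application's behalf. The function names and the port number in the usage note are assumptions made for this example and are not taken from the tutorial.

```python
import socket
import struct


def build_membership_request(group: str, interface: str = "0.0.0.0") -> bytes:
    """Pack the ip_mreq structure: the group address followed by the local interface address."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface))


def join_group(group: str, port: int) -> socket.socket:
    """Open a UDP socket and subscribe it to a multicast group (the kernel sends the IGMP join)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, build_membership_request(group))
    return sock


def leave_group(sock: socket.socket, group: str) -> None:
    """Unsubscribe from the group (the kernel sends the IGMP leave) and close the socket."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_DROP_MEMBERSHIP, build_membership_request(group))
    sock.close()
```

A receiver could call `join_group("225.0.0.11", 6666)`, read datagrams with `recvfrom`, and call `leave_group` when it is done; port 6666 is a placeholder.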

What IP address schema does multicast use?

IGMP uses IP addresses set aside for multicasting. Multicast IP addresses are in the range between 224.0.0.0 and 239.255.255.255. Each multicast group shares one of these IP addresses. When a router receives packets directed at the shared IP address, it duplicates them, sending copies to all multicast group members.
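The range above corresponds to the CIDR block 224.0.0.0/4. A minimal sketch of checking whether an address can be used as a group address, using only the Python standard library (the helper name is an assumption for this example):

```python
import ipaddress

# 224.0.0.0 through 239.255.255.255 expressed as a single CIDR block.
MULTICAST_RANGE = ipaddress.ip_network("224.0.0.0/4")


def is_multicast_group(address: str) -> bool:
    """Return True if the address falls inside the IPv4 multicast range."""
    return ipaddress.ip_address(address) in MULTICAST_RANGE


if __name__ == "__main__":
    for candidate in ("225.0.0.11", "255.0.0.11"):
        print(candidate, is_multicast_group(candidate))
```

A check like this catches a mistyped first octet (255 instead of 225, for example) before a group is configured.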

IGMP multicast groups can change at any time. A device can send an IGMP “join group” or “leave group” message anytime.

The need for Multicast: can it aid financial services?

Financial services depend on trading, pricing, and exchange services, each with multiple endpoints that need to receive data simultaneously, and this is where multicast comes to the aid. These endpoints could be producers of data (pushing out multicast data to other consumers) or consumers of data (receiving data from other producers). This increases complexity in a cloud native landscape, and this blog post aims to untangle that complexity in a simple and intuitive way.

What is Isovalent Enterprise for Cilium?

“Cilium is the next generation for container networking.”

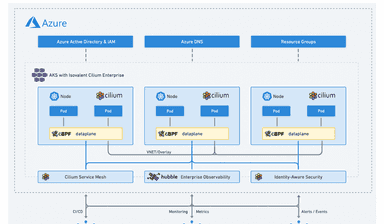

Isovalent Cilium Enterprise is an enterprise-grade, hardened distribution of open-source projects Cilium, Hubble, and Tetragon, built and supported by the Cilium creators. Cilium enhances networking and security at the network layer, while Hubble ensures thorough network observability and tracing. Tetragon ties it all together with runtime enforcement and security observability, offering a well-rounded solution for connectivity, compliance, multi-cloud, and security concerns.

Why Isovalent Enterprise for Cilium?

For enterprise customers requiring support and usage of Advanced Networking, Security, and Observability features, “Isovalent Enterprise for Cilium” is recommended with the following benefits:

- Advanced network policy: Isovalent Cilium Enterprise provides advanced network policy capabilities, including DNS-aware policy, L7 policy, and deny policy, enabling fine-grained control over network traffic for micro-segmentation and improved security.

- Hubble flow observability + User Interface: Isovalent Cilium Enterprise Hubble observability feature provides real-time network traffic flow, policy visualization, and a powerful User Interface for easy troubleshooting and network management.

- Multi-cluster connectivity via Cluster Mesh: Isovalent Cilium Enterprise provides seamless networking and security across multiple clouds, including public cloud providers like AWS, Azure, and Google Cloud Platform, as well as on-premises environments.

- Advanced Security Capabilities via Tetragon: Tetragon provides advanced security capabilities such as protocol enforcement, IP and port whitelisting, and automatic application-aware policy generation to protect against the most sophisticated threats. Built on eBPF, Tetragon can easily scale to meet the needs of the most demanding cloud-native environments.

- Service Mesh: Isovalent Cilium Enterprise provides sidecar-free, seamless service-to-service communication and advanced load balancing, making deploying and managing complex microservices architectures easy.

- Enterprise-grade support: Isovalent Cilium Enterprise includes enterprise-grade support from Isovalent’s experienced team of experts, ensuring that any issues are resolved promptly and efficiently. Additionally, professional services help organizations deploy and manage Cilium in production environments.

Why Multicast on Isovalent Enterprise for Cilium?

- Kubernetes Custom Resource Definitions (CRDs) are a powerful extension to the Kubernetes API that expands Kubernetes beyond its core resource types. CRDs allow administrators to introduce new, application-specific resources into Kubernetes clusters, tailoring the platform to unique requirements. Multicast is enabled in Isovalent Enterprise for Cilium using CRDs.

- The Multicast datapath is fully available for both Cilium OSS and Isovalent Enterprise for Cilium users, with the enterprise offering a CRD-based control plane to configure and manage multicast groups easily.

Good to know before you start

- The maximum number of multicast groups supported is 1024, and the maximum number of subscribers per group on each node is 1024.

- Cilium supports both IGMPv2 and IGMPv3 join and leave messages.

- Current limitations with Multicast running on a Kubernetes cluster with Cilium:

  - This feature works in tunnel routing mode and is currently restricted to the VxLAN tunnel.

  - Only IPv4 multicast is supported.

  - Only IPsec transparent encryption is supported.
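The limits listed above can be checked against a planned configuration before anything is applied to the cluster. The sketch below is only an illustration: the function name and the shape of its inputs are assumptions, and the two constants simply restate the numbers given in this section.

```python
import ipaddress

MAX_GROUPS = 1024                 # maximum number of multicast groups
MAX_SUBSCRIBERS_PER_NODE = 1024   # maximum subscribers per group on each node


def check_multicast_plan(groups, subscribers_per_node):
    """Return a list of problems found in a planned multicast setup.

    groups: list of group addresses as strings.
    subscribers_per_node: dict mapping a group address to the largest number
    of subscribers expected for that group on any single node.
    """
    problems = []
    if len(groups) > MAX_GROUPS:
        problems.append(f"{len(groups)} groups planned, limit is {MAX_GROUPS}")
    for group in groups:
        if ipaddress.ip_address(group).version != 4:
            problems.append(f"{group}: only IPv4 multicast is supported")
    for group, count in subscribers_per_node.items():
        if count > MAX_SUBSCRIBERS_PER_NODE:
            problems.append(
                f"{group}: {count} subscribers on one node, limit is {MAX_SUBSCRIBERS_PER_NODE}"
            )
    return problems
```

For example, `check_multicast_plan(["225.0.0.11"], {"225.0.0.11": 3})` returns an empty list, while a plan that includes an IPv6 group such as `ff02::1` reports a problem.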

Prerequisites

The following prerequisites must be considered before you proceed with this tutorial.

- An up-and-running Kubernetes cluster. If you don’t have one, you can create a cluster using one of these options:

- The following dependencies should be installed:

- Install Azure CLI.

- You should have an Azure Subscription.

- Install kubectl.

- Install Cilium CLI.

- Install Helm.

- Ensure that the kernel version on the Kubernetes nodes is >= 5.13
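The kernel requirement can be checked by comparing the major and minor numbers of the kernel release string, which is what `uname -r` prints on a node and what the KERNEL-VERSION column of `kubectl get nodes -o wide` shows. A minimal sketch, with a helper name and sample release strings chosen only for illustration:

```python
import re

MIN_KERNEL = (5, 13)


def kernel_at_least(release: str, minimum=MIN_KERNEL) -> bool:
    """Compare the major.minor prefix of a kernel release string with a minimum version."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum


if __name__ == "__main__":
    # Illustrative release strings, not output captured from a real cluster.
    for release in ("5.15.0-1041-azure", "5.4.0-1100-azure"):
        print(release, kernel_at_least(release))
```

Comparing tuples of integers avoids the pitfall of string comparison, where "5.4" would sort after "5.13".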

How can you achieve Multicast functionality with Cilium?

Create Kubernetes Cluster(s)

You can enable multicast on any Kubernetes cluster distribution as it applies to your use case. These clusters can be created from your local machine or a VM in the respective resource group/VPC/VNet in the respective cloud distribution. In this tutorial, we will be enabling Multicast on the following distribution:

- Azure Kubernetes Service (AKS)

Create an AKS cluster with BYOCNI in dual-stack mode.

Set the subscription

Choose the subscription you want to use if you have multiple Azure subscriptions.

- Replace `SubscriptionName` with your subscription name.

- You can also use your subscription ID instead of your subscription name.

AKS Resource Group Creation

Create a Resource Group

AKS Cluster creation

Pass the --network-plugin parameter with the parameter value of none.

Set the Kubernetes Context

Log in to the Azure portal, browse to Kubernetes Services, select the respective Kubernetes service created (AKS Cluster), and click Connect. This will help you connect to your AKS cluster and set the respective Kubernetes context.

Cluster status check

Check the status of the nodes and make sure they are in a “Ready” state.
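If you prefer to script this check, the node list returned by `kubectl get nodes -o json` carries a `Ready` condition for each node. The sketch below assumes that JSON has already been loaded; the function name is an assumption, and the sample document is a hypothetical, trimmed-down stand-in for real output.

```python
import json


def not_ready_nodes(node_list: dict) -> list:
    """Return the names of nodes whose Ready condition is not "True"."""
    not_ready = []
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
        )
        if not ready:
            not_ready.append(node["metadata"]["name"])
    return not_ready


# Hypothetical sample with made-up node names, for illustration only.
SAMPLE = json.loads("""
{"items": [
  {"metadata": {"name": "node-a"},
   "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
  {"metadata": {"name": "node-b"},
   "status": {"conditions": [{"type": "Ready", "status": "False"}]}}
]}
""")

if __name__ == "__main__":
    print(not_ready_nodes(SAMPLE))
```

An empty result means every node reported a Ready condition with status "True".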

Install Isovalent Enterprise for Cilium

- Users can contact their partner Sales/SE representative(s) at sales@isovalent.com to access the requisite documentation and learn how to install Isovalent Enterprise for Cilium on an AKS cluster with BYOCNI as the network plugin.

Validate Cilium version

Check the version of cilium with cilium version:

Cilium Health Check

cilium-health is a tool available in Cilium that provides visibility into the overall health of the cluster’s networking connectivity. You can check node-to-node health with cilium-health status:

How can we test Multicast with Cilium?

We will go over creating some basic application(s) and manifests.

Configuration

- Before pods can join multicast groups, `IsovalentMulticastGroup` resources must be configured to define which groups are enabled in the cluster.

- For this tutorial, groups `225.0.0.11`, `225.0.0.12`, and `225.0.0.13` are enabled in the cluster. Pods can then start joining and sending traffic to these groups.

- Apply the Custom Resource.

- Check if the Custom Resource has been applied.

Create sample deployment(s)

- In this tutorial, we will create four pods on a three-node Kubernetes cluster, which will participate in joining the multicast group and sending multicast packets. Pod deployment is done using the following:

- Deploy the Deployment using:

- Verify the status of the deployment.

Multicast Group Validation

- Validate the configured `IsovalentMulticastGroup` resource and inspect its contents:

- Each cilium agent pod contains the CLI cilium-dbg, which can be used to inspect multicast BPF maps. This command will list all the groups configured on the node.

Add multicast subscribers

- Pods that want to join multicast groups must send out IGMP join messages for specific group addresses. In this tutorial, pods `netshoot-665b547d78-kgqnc`, `netshoot-665b547d78-wqhvh`, and `netshoot-665b547d78-zwhvh` join multicast group `225.0.0.11`.

- Each pod uses `socat`, which receives multicast packets addressed to 225.0.0.11 and forks a child process for each.

  - The child processes may each send one or more reply packets to the particular sender. Run this command on several hosts, and they will all respond in parallel.

Validate multicast subscribers

- You can validate that subscribers are tracked in BPF maps using the command `cilium-dbg bpf multicast subscriber list all`. Note that the subscriber list command displays information from the perspective of a given node.

- In this output, one local pod subscribes to a multicast group, and two other nodes have pods joining the group 225.0.0.11.

Generating Multicast Traffic

- Multicast Traffic can be generated from one of the Netshoot pods; all subscribers should receive it.

- When Multicast Traffic is sent from the `netshoot-665b547d78-kgqnc` Pod:

- Multicast Traffic is received by all three pods.
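For readers who want to generate test traffic from their own application rather than from a command-line tool, a sender only needs a UDP socket and the group address as the destination. The sketch below is an assumption-laden illustration: the function names, port, and payload are made up for this example, and the TTL value your environment needs may differ.

```python
import socket


def open_multicast_sender(ttl: int = 1) -> socket.socket:
    """Create a UDP socket for sending multicast datagrams with the given TTL."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    return sock


def send_to_group(sock: socket.socket, group: str, port: int, payload: bytes) -> int:
    """Send one datagram to the multicast group and return the number of bytes sent."""
    return sock.sendto(payload, (group, port))
```

A sender does not have to join the group itself. Calling `send_to_group(sock, "225.0.0.11", 6666, b"hello")` would address one datagram to the group on the placeholder port 6666.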

How can I verify Multicast Traffic?

- A common question is how to verify that the testbed actually carries the protocol you set it up for. In this case, for multicast, you can verify the IGMP join message being sent from the netshoot-* pods.

- tcpdump needs to be installed on the netshoot pods via `apt-get install tcpdump`.
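When reading a capture, the first byte of an IGMP message identifies its type, and for IGMPv2 reports and leaves, bytes 4 to 7 carry the group address. The decoder below is a small sketch for interpreting raw IGMP payloads; the function name is an assumption, and it deliberately does not parse the group records inside IGMPv3 reports.

```python
import socket
import struct

# IGMP message type codes.
IGMP_TYPES = {
    0x11: "Membership Query",
    0x12: "IGMPv1 Membership Report",
    0x16: "IGMPv2 Membership Report",
    0x17: "IGMPv2 Leave Group",
    0x22: "IGMPv3 Membership Report",
}


def describe_igmp(message: bytes) -> str:
    """Describe an IGMP message given its raw bytes (the IP payload)."""
    if len(message) < 8:
        raise ValueError("an IGMP message is at least 8 bytes long")
    msg_type, _max_resp, _checksum = struct.unpack("!BBH", message[:4])
    name = IGMP_TYPES.get(msg_type, f"unknown type 0x{msg_type:02x}")
    if msg_type in (0x16, 0x17):
        # IGMPv2 report and leave messages carry the group address in bytes 4-7.
        return f"{name} for {socket.inet_ntoa(message[4:8])}"
    return name
```

An IGMPv2 join for 225.0.0.11 therefore starts with the byte `0x16` and ends with the four bytes `225 0 0 11`.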

How can I observe Multicast Traffic?

In an enterprise environment, the key is to ensure that the intended traffic is closely examined and that any anomalies detected are acted upon. To observe Multicast Traffic, you can install Isovalent Enterprise for Tetragon and look at the respective metrics provided by ServiceMonitor to Grafana.

What is Tetragon?

Tetragon provides powerful security observability and a real-time runtime enforcement platform. The creators of Cilium have built Tetragon and brought the full power of eBPF to the security world.

Tetragon helps platform and security teams solve the following:

Security Observability:

- Observing application and system behavior such as process, syscall, file, and network activity

- Tracing namespace, privilege, and capability escalations

- File integrity monitoring

Runtime Enforcement:

- Application of security policies to limit the privileges of applications and processes on a system (system calls, file access, network, kprobes)

How can I install Isovalent Enterprise for Tetragon?

To obtain the helm values to install Isovalent Enterprise for Tetragon and access to Enterprise documentation, reach out to our sales team and support@isovalent.com

Integrate Prometheus & Grafana with Tetragon

- Install Prometheus and Grafana.

- To access the Enterprise documentation for integrating Tetragon with Prometheus and Grafana, contact our sales team and support@isovalent.com.

- Integrated multicast and UDP socket dashboards can be obtained by contacting our sales team and support@isovalent.com.

- Apply the UDP & Interface parser tracing policies from the above links.

- Enabling the UDP sensor provides eBPF observability for the following features:

- UDP TX/RX Traffic

- UDP TX/RX Segments

- UDP TX/RX Bursts

- UDP Latency Histogram

- UDP Multicast TX/RX Traffic

- UDP Multicast TX/RX Segments

- UDP Multicast TX/RX Bursts

- The UDP parser provides UDP state visibility into your Kubernetes cluster for your microservices.

- We can observe the network metrics with Tetragon and trace the pod binary/process from which the traffic originates.

- To access the Grafana dashboard, forward the traffic to your local machine or a respective machine from where access is available.

- Grafana can also be accessed via a service of the type `LoadBalancer`.

- Log in to the Grafana dashboard with the requisite credentials, browse to dashboards, and click on the dashboard named “Tetragon/ UDP Throughput/ Socket.”

- Visualize metrics for a specific multicast group.

How do I check Tetragon values and metrics if I don’t have access to Grafana?

- You can also look at the raw stats by doing a simple curl to the Tetragon service. Look for the raw stats and then export them to your chosen SIEM. As an example:

- In the scenario above, the Tetragon service is running on the following:

- Log in to one of the workloads from where the Tetragon service is accessible:
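Once the raw stats have been fetched, they arrive in the Prometheus text exposition format, which is easy to filter before forwarding to a SIEM. The sketch below assumes the response body is already available as a string and that samples carry no trailing timestamps; the function name and the metric names in the usage note are hypothetical and are not real Tetragon metric names.

```python
def filter_metrics(exposition_text: str, needle: str) -> dict:
    """Return {series: value} for samples whose series name or labels contain needle."""
    samples = {}
    for line in exposition_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        # The value is the last space-separated field; label values may contain spaces.
        series, _, value = line.rpartition(" ")
        if needle in series:
            samples[series] = float(value)
    return samples
```

For instance, filtering a body that contains `hypothetical_udp_tx_bytes{pod="netshoot"} 1024` with the needle `"udp"` keeps that sample and drops unrelated series.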

Can I encrypt Multicast Traffic?

While developing this feature, some customers asked us to encrypt Multicast Traffic. We are happy to announce that you can use IPsec transparent encryption to enable traffic encryption between pods across nodes.

Configuration

- Sysctl: The following sysctl must be configured on the nodes to allow encrypted Multicast Traffic to be forwarded correctly:

- IPsec secret: A Kubernetes secret should consist of one key-value pair where the key is the name of the file to be mounted as a volume in cilium-agent pods. Configure an IPsec secret using the following command:

- The secret can be listed with `kubectl -n kube-system get secrets` and will appear as `cilium-ipsec-keys`.

- In addition to the IPsec configuration that encrypts unicast traffic between pods and pods on remote nodes, you can set the `enable-ipsec-encrypted-overlay` config option to `true` to encrypt Multicast Traffic between pods and pods on remote nodes.
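To make the shape of the secret's value more concrete, the sketch below builds a key string in the layout shown in the open-source Cilium IPsec documentation: an SPI, the cipher name, a hex-encoded key, and the key size in bits. Treat this as an assumption rather than the enterprise procedure: the function name is made up, newer Cilium releases show the SPI with a trailing `+`, and the Enterprise documentation remains the authority on the exact format.

```python
import secrets


def ipsec_key_value(spi: int = 3) -> str:
    """Build a key string of the form '<SPI> rfc4106(gcm(aes)) <hex key> 128'."""
    # 20 random bytes: a 16-byte AES key plus the 4-byte salt that rfc4106 expects.
    key_hex = secrets.token_hex(20)
    return f"{spi} rfc4106(gcm(aes)) {key_hex} 128"


if __name__ == "__main__":
    print(ipsec_key_value())
```

In the open-source documentation this string is stored under a key named `keys` in the `cilium-ipsec-keys` secret. Because the value is key material, it should be generated once and stored securely, not written to logs.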

How can I install/upgrade my cluster with Multicast and IPsec?

You can either upgrade an existing cluster that already runs Multicast to add IPsec or create a greenfield cluster with Multicast + IPsec.

To obtain the helm values to install IPsec with Multicast and access to Enterprise documentation, reach out to our sales team and support@isovalent.com

How can I validate if the cluster has been enabled for encryption?

You can ensure that IPsec has been enabled as the encryption type by:

Create sample application(s)

You can create a client and server application that are pinned on two distinct Kubernetes nodes to test node-to-node IPsec encryption by using the following manifests:

- Apply the client-side manifest. The client does a “wget” towards the server every 2 seconds.

- Apply the server-side manifest.

How can I check that the traffic is encrypted and sent over the VxLAN tunnel?

- Log in to the node via a shell session and install tcpdump on the nodes where the server pod has been deployed.

- Check the status of the deployed pods.

- With the client continuously sending traffic to the server, we can observe node-to-node and pod-to-pod traffic being encrypted and sent over distinct interfaces, `eth0` and `cilium_vxlan`.

Conclusion

Hopefully, this post gave you a good overview of enabling Multicast in the cloud with Isovalent Enterprise for Cilium and using IPsec transparent encryption to enable traffic encryption between pods across nodes. You can schedule a demo with our experts if you’d like to learn more.

Try it out

Start with the Multicast lab and see how to enable multicast in your enterprise environment.

Amit Gupta is a senior technical marketing engineer at Isovalent, powering eBPF cloud-native networking and security. Amit has 21+ years of experience in Networking, Telecommunications, Cloud, Security, and Open-Source. He has previously worked with Motorola, Juniper, Avi Networks (acquired by VMware), and Prosimo. He is keen to learn and try out new technologies that aid in solving day-to-day problems for operators and customers.

He has worked in the Indian start-up ecosystem for a long time and helps new folks in that area outside of work. Amit is an avid runner and cyclist and also spends considerable time helping kids in orphanages.