Cilium 1.15 – Gateway API 1.0 Support, Cluster Mesh Scale Increase, Security Optimizations and more!

After over 2,700 commits contributed by an ever-growing community of nearly 700 contributors, Cilium 1.15 is now officially released! Since the previous major release 6 months ago, we’ve celebrated a lot of Cilium milestones. Cilium graduated as a CNCF project, marking it as the de facto Kubernetes CNI. The documentary that narrated the conception of eBPF and Cilium was launched. The first CNCF Cilium certification was announced.

But the Cilium developers and community didn’t stay idle: Cilium 1.15 is packed with new functionalities and improvements. Let’s review the major advancements in this open source release:

Cilium 1.15 now supports Gateway API 1.0. The next generation of Ingress is built into Cilium, meaning you don’t need extra tools to route and load-balance incoming traffic into your cluster. Cilium Gateway API now supports GRPCRoute for gRPC-based applications, alongside additional new features such as the ability to redirect, rewrite and mirror HTTP traffic.

Cilium 1.15 includes a ton of security improvements: BGP peering sessions now support MD5-based authentication and Envoy – used for Layer 7 processing and observability by Cilium – has seen its security posture strengthened. In addition, with Hubble Redact, you can remove any sensitive data from the flows collected by Hubble.

Cluster Mesh operators will also see some major improvements: you can now double the number of meshed clusters! The introduction of KVStoreMesh in Cilium 1.14 paved the way for greater scalability – in Cilium 1.15, you can now mesh up to 511 clusters together.

Kubernetes users will also benefit from Hubble’s new observability options – you can now correlate traffic to a Network Policy, export Hubble flows to a file for later consumption as logs and identify a specific flow by using some of the new Hubble filters such as flows coming from a specific cluster or HTTP flows based on their URL or header values.

Talking of observability – we’ve not even mentioned the lightweight eBPF security observability tool Cilium Tetragon yet. It’s intentional – Tetragon has grown so much we’ll save all the new features in an upcoming blog post! Cilium Tetragon 1.0 was released in October and the momentum behind the low overhead, high performance cloud native runtime security tool is remarkable.

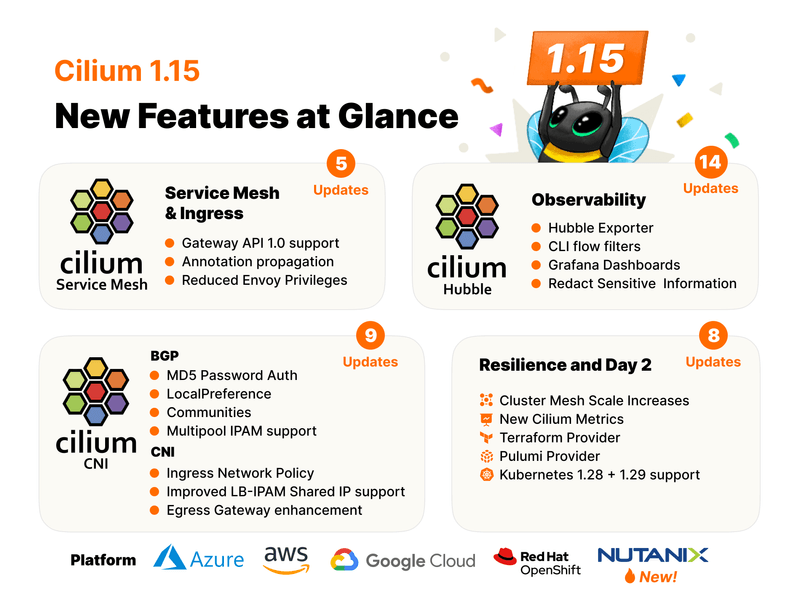

Cilium 1.15 – New Features at a Glance

The latest open source release of Cilium includes all these new features and improvements:

Service Mesh & Ingress/Gateway API

- Gateway API 1.0 Support: Cilium now supports Gateway API 1.0 (more details)

- Gateway API gRPC Support: Cilium can now route gRPC traffic, using the Gateway API (more details)

- Annotation Propagation from GW to LB: Both the Cilium Ingress and Gateway API can propagate annotations to the Kubernetes LoadBalancer Service (more details)

- Reduced Envoy Privileges: Envoy’s security posture has been reinforced (more details)

- Ingress Network Policy: Enforce ingress network policies for traffic inbound via Ingress and Gateway API (more details)

Networking

- BGP Security: Support for MD5-based session authentication has landed (more details)

- BGP Traffic Engineering: New BGP attributes (communities and local preference) are now supported (more details)

- BGP Operational Tooling: Track BGP peering sessions and routes from the CLI (more details)

- BGP Support for Multi-Pool IPAM: Cilium can now advertise PodIPPools, used by the most flexible IPAM method (more details)

Day 2 Operations and Scale

- Cluster Mesh Twofold Scale Increase: Cluster Mesh now supports 511 meshed clusters (more details)

- Cilium Agent Health Check Observability: Enhanced health check data for Cilium Agent sub-system states (more details)

- Terraform/OpenTofu & Pulumi Cilium Provider: You can now deploy Cilium using your favourite Infra-As-Code tool (more details)

- Kubernetes 1.28 and 1.29 support: The latest Kubernetes releases are now supported with Cilium (more details)

Hubble & Observability

- New Grafana Dashboards: Cilium 1.15 includes two new network and DNS Grafana dashboards (more details)

- Hubble Flows to a Network Policy Correlation: Use Hubble to understand which network policy is permitting traffic, helping you know if it is having the intended effect on application communications (more details)

- Hubble Flow Exporter: Export Hubble flows to a file for later consumption as logs (more details)

- New Hubble CLI filters: Identify a specific flow by using some of the new Hubble filters such as flows coming from a specific cluster or HTTP flows based on their URL or header values (more details)

- Hubble Redact: Remove sensitive information from Hubble output (more details)

Service Mesh & Ingress/Gateway API

Gateway API 1.0

Gateway API support – the long-term replacement for the Ingress API – was first introduced in Cilium 1.13 and has become widely adopted. Many Cilium users can now route and load-balance incoming traffic into their cluster without having to install a third party ingress controller or a dedicated service mesh.

Cilium 1.15’s implementation of Gateway API is fully compliant with the 1.0 version and supports, amongst other things, the following use cases:

- HTTP routing

- HTTP traffic splitting and load-balancing

- HTTP request and response header rewrite

- HTTP redirect and path rewrites

- HTTP mirroring

- Cross-namespace routing

- TLS termination and passthrough

- gRPC routing

Learning Gateway API

All these features can be tested and validated in our popular free online labs, which have all been updated to Cilium 1.15.

Gateway API Lab

In this lab, learn about the common Gateway API use cases: HTTP routing, HTTP traffic splitting and load-balancing and TLS termination and passthrough!

Advanced Gateway API Lab

In this lab, learn about advanced Gateway API use cases such as HTTP request and response header rewrite, HTTP redirect and path rewrites, HTTP mirroring and gRPC routing!

To learn more about the Gateway API, the origins of the project and how it is supported in Cilium, read the following blog post:

To learn more about how to redirect, rewrite and mirror traffic with the Gateway API, check out this new tutorial:

Tutorial: Redirect, Rewrite and Mirror HTTP with Cilium Gateway API

How to manipulate and alter HTTP with Cilium

Let’s dive into the latest feature – gRPC Routing.

gRPC Routing

gRPC, the high-performance RPC framework, is now supported by Cilium Gateway API. Many modern applications use gRPC for communication between microservices, including bi-directional data streaming.

With Cilium 1.15, you can now route gRPC traffic based on gRPC services and methods to specific backends.

The GRPCRoute resource allows you to match gRPC traffic on host, header, service and method fields and forward it to different Kubernetes Services.

Note that, at time of writing, GRPCRoute remains in the “Experimental” channel of Gateway API. For more information, check the Gateway API docs.
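A gRPC call travels as an HTTP/2 request whose path has the form /<package.Service>/<Method>, which is what makes service- and method-based matching possible. The Python sketch below illustrates that idea only. It is not Cilium's implementation. The rule type and the backend names (greeter-v1, greeter-v2) are hypothetical, and the sketch uses simple first-match ordering rather than the full GRPCRoute precedence rules from the Gateway API specification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GrpcRule:
    """Hypothetical, simplified stand-in for one GRPCRoute rule."""
    service: str            # fully qualified gRPC service, e.g. "helloworld.Greeter"
    method: Optional[str]   # None means "any method of this service"
    backend: str            # Kubernetes Service to forward to (hypothetical name)


def split_grpc_path(path: str) -> tuple[str, str]:
    """Split an HTTP/2 :path such as "/helloworld.Greeter/SayHello"."""
    parts = path.lstrip("/").split("/")
    if len(parts) != 2 or not all(parts):
        raise ValueError(f"not a gRPC path: {path!r}")
    return parts[0], parts[1]


def route(path: str, rules: list[GrpcRule]) -> Optional[str]:
    """Return the backend of the first matching rule, or None if nothing matches."""
    service, method = split_grpc_path(path)
    for rule in rules:
        if rule.service == service and rule.method in (None, method):
            return rule.backend
    return None


rules = [
    GrpcRule("helloworld.Greeter", "SayHello", "greeter-v2"),
    GrpcRule("helloworld.Greeter", None, "greeter-v1"),
]
print(route("/helloworld.Greeter/SayHello", rules))    # greeter-v2
print(route("/helloworld.Greeter/SayGoodbye", rules))  # greeter-v1
```

Here, listing the more specific rule first is what makes SayHello reach greeter-v2. The Gateway API specification defines its own precedence between matches, so consult it rather than relying on rule order.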

Annotation Propagation from the Gateway to the Kubernetes LoadBalancer Service

The Cilium Gateway API now supports a new Infrastructure field that lets you specify labels or annotations that will be copied from the Gateway to the resources created, such as cloud load balancers.

It’s extremely useful in managed cloud environments like AKS or EKS when you want to customize the behaviour of the load balancers. It can help us control service IP addresses (via LB-IPAM annotations) and BGP/ARP announcements (via labels).
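Conceptually, propagation merges the labels and annotations from the Gateway's infrastructure field into the metadata of the generated Service. The Python sketch below models both manifests as plain dictionaries. It is not Cilium's code. The Service name lb-service, the label hello-world: "true" and the annotation example.com/owner are hypothetical values chosen for illustration.

```python
import copy


def propagate_infrastructure(gateway: dict, service: dict) -> dict:
    """Return a copy of `service` with the Gateway's spec.infrastructure
    labels and annotations merged into its metadata (Gateway values win on conflict)."""
    infra = gateway.get("spec", {}).get("infrastructure") or {}
    result = copy.deepcopy(service)  # leave the input manifest untouched
    metadata = result.setdefault("metadata", {})
    for field in ("labels", "annotations"):
        if infra.get(field):
            metadata.setdefault(field, {}).update(infra[field])
    return result


gateway = {
    "metadata": {"name": "my-gateway"},
    "spec": {
        "infrastructure": {
            "labels": {"hello-world": "true"},               # hypothetical label
            "annotations": {"example.com/owner": "team-a"},  # hypothetical annotation
        }
    },
}
service = {
    "metadata": {"name": "lb-service", "labels": {"app": "gw"}},  # hypothetical Service
    "spec": {"type": "LoadBalancer"},
}
print(propagate_infrastructure(gateway, service)["metadata"])
```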

In the demo below, once we add the hello-world label to the Gateway’s Infrastructure field and deploy the manifest, a LoadBalancer Service is created as expected, with the hello-world label.

Security Enhancements for Envoy

The Cilium project is always looking to improve the security of Cilium itself, beyond just providing security features for end users.

Looking back at recent security enhancements, Cilium 1.13 brought container image signing using cosign, software bill of materials (SBOM) for each image, and Server Name Indication (SNI) for TLS.

Cilium 1.15 introduces a notable security improvement related to Envoy permissions at L7, significantly reducing the scope of capabilities allowed by Envoy processes.

The Envoy proxy is used within Cilium whenever Layer 7 processing is required – that includes use cases such as Ingress/Gateway API, L7-aware network policies and visibility. In earlier versions of Cilium, Envoy would be deployed as a separate process within the Cilium agent pod.

However, the design had a couple of shortcomings: 1) the Cilium agent and the Envoy proxy shared not only the same lifecycle but also the same blast radius in the event of a compromise, and 2) Envoy would run as root.

Cilium 1.14 introduced the option to run Envoy as a DaemonSet. This decoupled the Envoy proxy’s lifecycle from that of the Cilium agent and lets users configure resources and log access separately for each. This will become the default option in Cilium 1.16.

This reduced the blast radius in the unlikely event of a compromise.

Cilium 1.15 goes one step further: the process that handles HTTP traffic no longer has privileges to access BPF maps or socket options directly. As a result, the blast radius of a potentially compromised Envoy proxy is greatly reduced, because this update restricts the permissions Envoy previously held when running as root.

Ingress Network Policy

By design, prior to Cilium 1.15, services exposed via Cilium Ingress would bypass CiliumClusterwideNetworkPolicy rules. At a technical level, this is because Envoy terminates the original TCP connection and forwards HTTP traffic to backends sourced from itself. At the point where Cilium Network Policy is enforced (tc ingress of the lxc interface), the original source IP is no longer present, so matching against a network policy cannot be performed.

Simply put, you couldn’t apply a network rule to inbound traffic to your cluster that would transit Ingress.

This wasn’t suitable for all situations, and users needed the additional security control to be able to manage the traffic coming into their exposed services.

In Cilium 1.15, this behaviour has been updated so that the original source identity is maintained, allowing policies to be enforced against ingress traffic. Both ingress and egress policies defined for the ingress identity are now enforced when configured with the new enforce_policy_on_l7lb option.
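To see why keeping the original source matters, consider a toy model of the two checks described above. The ingress policy of the ingress identity decides which clients may reach it, and its egress policy decides which backends it may forward to. This Python sketch is purely illustrative and is not how Cilium evaluates policy. The CIDR 172.18.0.0/24 and the app=web label are made-up examples.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class IngressIdentityPolicy:
    """Toy model of policies attached to the ingress identity (hypothetical)."""
    allowed_client_cidrs: list[str] = field(default_factory=list)
    allowed_backend_selectors: list[dict[str, str]] = field(default_factory=list)


def client_allowed(policy: IngressIdentityPolicy, client_ip: str) -> bool:
    """Ingress check: the original client IP must fall inside an allowed CIDR."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in policy.allowed_client_cidrs)


def backend_allowed(policy: IngressIdentityPolicy, backend_labels: dict[str, str]) -> bool:
    """Egress check: the backend must carry every label of at least one selector."""
    return any(
        all(backend_labels.get(k) == v for k, v in selector.items())
        for selector in policy.allowed_backend_selectors
    )


def admit(policy: IngressIdentityPolicy, client_ip: str, backend_labels: dict[str, str]) -> bool:
    """Both checks must pass. The ingress check is only meaningful if the
    original client IP is still known when the check runs."""
    return client_allowed(policy, client_ip) and backend_allowed(policy, backend_labels)


policy = IngressIdentityPolicy(
    allowed_client_cidrs=["172.18.0.0/24"],
    allowed_backend_selectors=[{"app": "web"}],
)
print(admit(policy, "172.18.0.10", {"app": "web"}))  # True
print(admit(policy, "10.1.2.3", {"app": "web"}))     # False: client outside the allowed CIDR
```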

To configure an ingress policy, you can see the below example:

Using Hubble to observe incoming traffic via Ingress, we can see that traffic not permitted by the policy is now denied.

Networking

BGP Features and Security Enhancements

Integrating Kubernetes clusters with the rest of the network is best done using the Border Gateway Protocol (BGP). BGP support has been enhanced over multiple releases since its initial introduction in Cilium 1.10, including IPv6 support in Cilium 1.12 and BGP Graceful Restart in Cilium 1.14.

Cilium 1.15 introduces support for a much requested feature (MD5-based password authentication), additional traffic engineering features such as support for BGP LocalPreference and BGP Communities and better operational tooling to monitor and manage your BGP sessions.

Before we dive into each new feature, here is a reminder of how you can learn about Cilium BGP.

Learning BGP on Cilium

To learn how and why to use Cilium BGP, you can take our two BGP labs, which have both been updated to Cilium 1.15. As with the rest of the Isovalent Cilium labs, these labs are free, online and hands-on.

BGP Lab

Cilium offers native support for BGP, exposing Kubernetes to the outside and all the while simplifying users’ deployments.

Advanced BGP Features Lab

In this lab, learn about advanced BGP features such as: BGP Timers Customization, eBGP Multihop, BGP Graceful Restart, BGP MD5 and Communities Support

To learn more about running BGP and Kubernetes, you can read Raymond’s blog post:

BGP Session Authentication with MD5

A long-awaited feature request, MD5-based authentication lets us protect our BGP sessions from spoofing attacks and nefarious agents. Once authentication is enabled, every segment sent on the BGP session’s TCP connection is protected against spoofing by a 16-byte MD5 digest, produced by applying the MD5 algorithm to TCP parameters and a chosen password known by both BGP peers.

Upon receiving a signed segment, the receiver must validate it by calculating its own digest from the same data (using its own password) and comparing the two digest results. A failing comparison will result in the segment being dropped.
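This digest scheme is specified in RFC 2385. For an IPv4 segment, the MD5 input is the TCP pseudo-header, followed by the fixed TCP header with its checksum zeroed (options excluded), then the segment data, and finally the shared password. The receiver recomputes the digest and compares. The Python sketch below is a simplified illustration of that computation. In practice, the operating system's TCP stack computes it, not application code. The header bytes and password used here are placeholders.

```python
import hashlib
import hmac
import ipaddress
import struct

TCP_PROTOCOL = 6


def tcp_md5_digest(src_ip: str, dst_ip: str, tcp_header: bytes,
                   options_len: int, payload: bytes, password: bytes) -> bytes:
    """Simplified RFC 2385 digest for an IPv4 TCP segment.

    tcp_header: the 20-byte fixed TCP header with its checksum field set to zero.
    options_len: length of the TCP options, which are excluded from the digest
                 but still count toward the segment length in the pseudo-header.
    """
    segment_len = len(tcp_header) + options_len + len(payload)
    pseudo_header = (
        ipaddress.IPv4Address(src_ip).packed
        + ipaddress.IPv4Address(dst_ip).packed
        + struct.pack("!BBH", 0, TCP_PROTOCOL, segment_len)  # zero pad, protocol, length
    )
    return hashlib.md5(pseudo_header + tcp_header + payload + password).digest()


def verify_segment(received: bytes, src_ip: str, dst_ip: str, tcp_header: bytes,
                   options_len: int, payload: bytes, password: bytes) -> bool:
    """Receiver side: recompute with the local password and compare (mismatch means drop)."""
    expected = tcp_md5_digest(src_ip, dst_ip, tcp_header, options_len, payload, password)
    return hmac.compare_digest(received, expected)


header = bytes(20)  # placeholder fixed header with a zeroed checksum
digest = tcp_md5_digest("10.0.0.1", "10.0.0.2", header, 20, b"BGP OPEN", b"s3cret")
print(len(digest))  # 16
print(verify_segment(digest, "10.0.0.1", "10.0.0.2", header, 20, b"BGP OPEN", b"wrong"))  # False
```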

Cilium’s BGP session authentication is very easy to use. First, create a Kubernetes Secret with the password of your choice:

Specify the Kubernetes Secret name in your BGP peering policy (see the authSecretRef option below) and the session will be authenticated as soon as your remote peer has a matching password configured. Note that the sample BGP peering policy highlights many of the new features that have been added in recent Cilium releases.

A look at Termshark shows that an MD5 digest is now attached to every TCP segment on your BGP session.

BGP MD5 is one of the many Cilium 1.15 features developed by external contributors (thank you David Leadbeater for this PR).

BGP Communities Support

BGP Communities – first defined in RFC 1997 – are used to tag routes advertised to other BGP peers. They are essentially routing and policy metadata and are primarily used for traffic engineering.

In the demo below, when we log onto the BGP router that peers with Cilium, we can see an IPv4 prefix learned from Cilium – that’s the range used by our Pods in the Kubernetes cluster.

When we add the community 65001:100 to our BGP peering policy and re-apply it, we can see that Cilium has added the community to the BGP route advertisement.

For example, communities can be used to tell our neighbors not to advertise this prefix to their other iBGP or eBGP neighbors.
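Under RFC 1997, a standard community is a 32-bit value, conventionally written as ASN:value with the AS number in the upper 16 bits. RFC 1997 also reserves well-known communities such as NO_EXPORT and NO_ADVERTISE for exactly the scoping use case above. The Python sketch below encodes and decodes them. The helper names are our own and are not a Cilium or GoBGP API.

```python
WELL_KNOWN = {
    "no-export": 0xFFFFFF01,            # do not advertise outside the AS (or confederation)
    "no-advertise": 0xFFFFFF02,         # do not advertise to any peer
    "no-export-subconfed": 0xFFFFFF03,  # do not advertise to any external peer
}


def encode_community(text: str) -> int:
    """'65001:100' -> 32-bit integer, or a well-known name -> its reserved value."""
    if text in WELL_KNOWN:
        return WELL_KNOWN[text]
    asn_str, sep, value_str = text.partition(":")
    if not sep:
        raise ValueError(f"expected ASN:value, got {text!r}")
    asn, value = int(asn_str), int(value_str)
    if not (0 <= asn <= 0xFFFF and 0 <= value <= 0xFFFF):
        raise ValueError("both halves must fit in 16 bits")
    return (asn << 16) | value


def decode_community(raw: int) -> str:
    """32-bit integer -> well-known name or 'ASN:value'."""
    for name, reserved in WELL_KNOWN.items():
        if raw == reserved:
            return name
    return f"{raw >> 16}:{raw & 0xFFFF}"


encoded = encode_community("65001:100")
print(hex(encoded))               # 0xfde90064
print(decode_community(encoded))  # 65001:100
```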

BGP Support for Multi-Pool IPAM

Cilium 1.14 introduced a new IP Address Management (IPAM) feature for users with more complex requirements on how IP addresses are assigned to pods. With Multi-Pool IPAM, Cilium users can allocate Pod CIDRs from multiple different IPAM pools. Based on a specific annotation or namespace, pods can receive IP addresses from different pools (defined as CiliumPodIPPools), even if they are on the same node.

In Cilium 1.15, you can now advertise CiliumPodIPPools to your BGP neighbors, using regular expressions or labels to advertise only the pools of your choice. For example, this peering policy would only advertise the IP pool with the color:green label.

Thanks to Daneyon Hansen for contributing this feature!
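Label-based pool selection follows the familiar matchLabels idea: a pool qualifies only if it carries every key/value pair in the selector. The minimal Python sketch below illustrates this. The pool names, labels and CIDRs are hypothetical, and regular-expression selection is not modeled.

```python
def select_pools(pools: list[dict], match_labels: dict[str, str]) -> list[str]:
    """Return the CIDRs of pools whose labels include every key/value in match_labels."""
    selected = []
    for pool in pools:
        labels = pool.get("labels", {})
        if all(labels.get(key) == value for key, value in match_labels.items()):
            selected.extend(pool["cidrs"])
    return selected


pools = [  # hypothetical CiliumPodIPPool summaries
    {"name": "green-pool", "labels": {"color": "green"}, "cidrs": ["10.20.0.0/16"]},
    {"name": "blue-pool", "labels": {"color": "blue"}, "cidrs": ["10.30.0.0/16"]},
    {"name": "default", "labels": {}, "cidrs": ["10.10.0.0/16"]},
]
print(select_pools(pools, {"color": "green"}))  # ['10.20.0.0/16']
```

In this sketch, an empty selector matches every pool, which is a good reason to keep selectors explicit.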

BGP Operational Tooling

With users leveraging BGP on Cilium to connect their clusters to the rest of their network, they need the right tools to manage the complex routing relationships and understand which routes are advertised by Cilium. To facilitate operating BGP on Cilium, Cilium 1.15 introduces CLI commands that show all peering relationships and advertised routes.

You can run these commands from the CLI of the Cilium agent (which has been renamed to cilium-dbg in Cilium 1.15 to distinguish it from the Cilium CLI binary) or using the latest version of the Cilium CLI.

Here are a couple of sample outputs from the Cilium agent CLI. First, let’s see all IPv4 BGP routes advertised to our peers (note that 10.244.0.0/24 is the Pod CIDR):

Let’s verify that the peering relationship with our remote device is healthy:

Day 2 Operations and Scale

Cluster Mesh Twofold Scale Increase

Ever wondered just how far you can scale Cilium? The community has reported some impressive production cluster sizes. Until Cilium 1.15, Cilium Cluster Mesh, which lets you span applications and services across multiple Kubernetes clusters regardless of the cloud, region or location they are in, could scale to 255 clusters.

Running an environment this large comes with design considerations and limitations. KVStoreMesh was introduced in Cilium 1.14 to address some of these issues: it caches the information obtained from remote clusters in a local kvstore (such as etcd), to which all local Cilium agents connect.

Cilium 1.15 further improves the scalability of your Kubernetes platform, now supporting up to 511 clusters. This is configured with the max-connected-clusters flag, either in the Cilium config or through Helm. At a technical level, Cilium identities in the datapath are represented as 24-bit unsigned integers: 16 bits (up to 65,535) for the cluster-local identity and 8 bits (up to 255) for the ClusterMesh ClusterID. The max-connected-clusters flag lets you configure how those 24 identity bits are split, so scaling to the maximum of 511 clusters limits cluster-local identities to 32,767. Note that the max-connected-clusters flag can only be set on new clusters.
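The trade-off is simple bit arithmetic: every bit given to the cluster ID is taken from the cluster-local identity. The Python sketch below reproduces the numbers above. It is not Cilium's code, and it only accepts the two values discussed in this post.

```python
IDENTITY_BITS = 24  # width of a Cilium identity in the datapath, as described above


def identity_layout(max_connected_clusters: int) -> tuple[int, int]:
    """Return (cluster_id_bits, max_cluster_local_identity)."""
    if max_connected_clusters not in (255, 511):
        raise ValueError("this sketch only models 255 and 511")
    cluster_bits = (max_connected_clusters + 1).bit_length() - 1  # 255 -> 8, 511 -> 9
    local_bits = IDENTITY_BITS - cluster_bits
    return cluster_bits, (1 << local_bits) - 1


for clusters in (255, 511):
    bits, max_local = identity_layout(clusters)
    print(f"{clusters} clusters: {bits} cluster-ID bits, up to {max_local} local identities")
```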

Interested in learning more about running Cilium at Scale? At KubeCon and CiliumCon North America, Isovalent’s own Ryan Drew and Marcel Zięba hosted deep dives into this area! We hope you enjoy their sessions posted below.

- Why KVStoreMesh? Lessons Learned from Scale Testing Cluster Mesh with 50k Nodes Across… – Ryan Drew

- Scaling Kubernetes Networking to 1k, 5k, … 100k Nodes!? – Marcel Zięba & Dorde Lapcevic

Cilium Agent health check observability enhancements

The data provided by the Cilium Agent has been improved in 1.15 to include a Health Check Observability feature. This enhancement provides an overview of sub-systems and their state inside of the Cilium Agent, providing an easier method to diagnose health degradations in the Agent. The output is shown as a tree view, as per the example below, using the cilium-dbg status --verbose command inside the Cilium Agent pod.

Below is an example output of the newly implemented Modules Health section of the verbose status output.

With this feature, platform owners have another way to troubleshoot errors and failures in Cilium. In CI testing, for example, tests can be extended with checks that ensure no errors or degradations are reported in the Modules Health section before the tests pass.

Infrastructure As Code Cilium support with Terraform/OpenTofu & Pulumi

Most operators use a GitOps approach to install Cilium, with tools such as ArgoCD, Helm, Flux, etc. In the broader cloud infrastructure space, Terraform and its open source fork OpenTofu remain among the most popular Infrastructure-As-Code tools. With the recent release of a Terraform/OpenTofu provider for Cilium, you can now deploy Cilium with terraform apply or tofu apply, depending on your personal preference.

Here is a demo with OpenTofu where we first deploy a cluster using the kind provider before deploying Cilium once the cluster is deployed:

If you’d rather use a common programming language like Go or Python to deploy Cilium instead of a domain specific language like HashiCorp’s HCL, you can also use Pulumi – the Pulumi Cilium provider is now available.

Kubernetes 1.28 and 1.29 Support

It might go unnoticed in most releases, but given all the effort that goes into validating Cilium against a matrix of Kubernetes versions, we thought it was worth mentioning: the latest Kubernetes releases, 1.28 and 1.29, are now supported with Cilium 1.15.

For more information about compatibility with Kubernetes, check out the Cilium docs. In the brief demo below, you can see that the Kubernetes nodes where Cilium is running are using Kubernetes 1.29.

Hubble & Observability

Hubble has received some really awesome updates in the Cilium 1.15 release, and we love talking to customers about the observability capabilities that Cilium brings out of the box!

If you are just getting started with Hubble, then check out our cheat sheet, which will help you get started with the Hubble CLI tool.

New Out-Of-The-Box Grafana Dashboards

We re-introduced Hubble to you last year – we hope you now feel reacquainted with the Kubernetes network troubleshooting tool, with its Wireshark-like capabilities for cloud native platforms.

Cilium 1.15 includes two new Grafana dashboards that use the network flow information from Cilium and Hubble. You can see these two dashboards in action in the KubeCon 2023 session linked below.

The first dashboard is the Network Overview, which gives you a quick look at the traffic load through your platform. It covers flows processed, connection drops and network policy drops, making it easy to spot when the load in your system may have become unstable.

The second dashboard is the DNS Overview visualisation. As we all know, DNS is a critical function to a healthy and optimal platform. With the layer 7 visibility capabilities of Hubble, you can use this dashboard to spot issues with DNS requests and pinpoint application failures.

You can dive further into the Cilium, Hubble and Grafana integrations in the Cilium Hubble Series (Part 3): Hubble and Grafana Better Together blog post, and read about how our Technical Marketing Engineer, Dean Lewis, found a DNS issue in his home network using Hubble!

The provided out-of-the-box Cilium and Hubble dashboards can be automatically imported by Grafana based on the label and value. To make it simple and easy to get started, when installing or upgrading Cilium, you can use the below values to import the dashboards to a Grafana instance, in this example, running in the namespace called “monitoring”.

To learn more about leveraging Hubble’s data in Grafana, check out Anna’s demo:

Correlate traffic flows to a Network Policy

A highly requested enhancement to Hubble network flow data was to include the network policy that admits each flow. In Cilium 1.15, two new metadata fields have been added to Hubble network flows, ingress_allowed_by and egress_allowed_by, which correlate permitted traffic flows with the Cilium Network Policies that allowed them.

These additional fields of metadata will provide more visibility to help understand and troubleshoot new and existing Cilium Network Policies that are applied to a cluster, helping the network and application engineers know if they are having the intended effect on application communications.
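Because the new fields travel with each flow, they are easy to aggregate. The Python sketch below counts allowed flows per policy from JSON lines, such as those printed by hubble observe -o json. The JSON layout it assumes (a top-level flow object whose ingress_allowed_by and egress_allowed_by entries carry name and namespace) is an assumption, so check it against your own output. The sample records are invented.

```python
import json
from collections import Counter


def count_allowing_policies(lines: list[str]) -> Counter:
    """Count flows per (direction, namespace, policy name).
    Assumes each line is one JSON object with a top-level "flow" key."""
    counts: Counter = Counter()
    for line in lines:
        flow = json.loads(line).get("flow", {})
        for direction in ("ingress_allowed_by", "egress_allowed_by"):
            for policy in flow.get(direction, []):
                counts[(direction, policy.get("namespace", ""), policy.get("name", ""))] += 1
    return counts


sample = [  # invented sample records
    '{"flow": {"verdict": "FORWARDED", "ingress_allowed_by": [{"name": "allow-frontend", "namespace": "shop"}]}}',
    '{"flow": {"verdict": "FORWARDED", "ingress_allowed_by": [{"name": "allow-frontend", "namespace": "shop"}]}}',
    '{"flow": {"verdict": "FORWARDED", "egress_allowed_by": [{"name": "allow-dns", "namespace": "shop"}]}}',
]
for key, n in count_allowing_policies(sample).most_common():
    print(n, *key)
```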

Hubble Flow Exporter

The Hubble Exporter is a new feature of cilium-agent, implemented by a community contributor, Aleksander Mistewicz, that provides the ability to write Hubble flows to a file for later consumption as logs.

There are two exporter configurations available:

- Static – this accepts only one set of filters and requires a Cilium pod restart to change the config

- Dynamic – this allows configuring multiple filters at the same time and saving their output in separate files. Additionally, it does not require Cilium pod restarts to apply configuration changes.

For further control of the data exported, the Hubble Exporter supports configuring file rotation, size limits, the number of rotated files retained, and field masks. While the first few options control the files themselves, filters and field masks give granular control over which flows, and which fields of each flow, are exported. For example, you can capture all flows with a particular verdict, such as DROPPED or ERROR. You can also capture only flows from a specific namespace or pod, and choose whether that selection applies to the source, the destination or both!
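To make the difference between filters and field masks concrete, here is a small Python model. A filter decides whether a flow is written at all, while a field mask decides which fields of that flow are kept. It is an illustration rather than the exporter's code. The flow shape and the dotted field paths (such as source.namespace) are assumptions modeled on Hubble's JSON output.

```python
def matches_source_namespace(flow: dict, namespaces: set[str]) -> bool:
    """Include filter: keep flows whose source pod lives in one of `namespaces`."""
    return flow.get("source", {}).get("namespace") in namespaces


def apply_field_mask(flow: dict, paths: list[str]) -> dict:
    """Copy only the dotted `paths` (e.g. "source.namespace") into a new dict.
    Paths that do not exist in the flow are silently skipped."""
    masked: dict = {}
    for path in paths:
        keys = path.split(".")
        node = flow
        for key in keys:
            if not isinstance(node, dict) or key not in node:
                break
            node = node[key]
        else:  # every key along the path was found
            target = masked
            for key in keys[:-1]:
                target = target.setdefault(key, {})
            target[keys[-1]] = node
    return masked


flows = [  # invented sample flows
    {"time": "t1", "verdict": "FORWARDED", "source": {"namespace": "tenant-jobs", "pod_name": "crawler-1"}},
    {"time": "t2", "verdict": "DROPPED", "source": {"namespace": "default", "pod_name": "curl"}},
]
exported = [
    apply_field_mask(f, ["time", "verdict", "source.namespace"])
    for f in flows
    if matches_source_namespace(f, {"tenant-jobs"})
]
print(exported)
```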

Let’s look at an example configuration of a dynamic Hubble Flow Exporter. The below configuration creates a config map named cilium-flowlog-config that will store the configuration of the dynamic flow filters.

This configuration provides the following:

- Enables the Dynamic Exporter and creates two exporter files:

  - Exporter Config 1: captures all network flows relating to traffic originating from the tenant-jobs namespace and saves them to the file path /var/run/cilium/hubble/tenant-jobs.log.

  - Exporter Config 2: captures all network flows to and from any pod with the label k8s-app=kube-dns. A field mask specifies which fields of the flow JSON output are captured, including the Summary field, which provides the DNS FQDN requested and the network address returned in the response. Saves this to the file path /var/run/cilium/hubble/coredns.log.

This type of configuration is really great for troubleshooting, especially DNS issues, where you can use Hubble to capture DNS requests and responses!

Below is an example of the data captured from CoreDNS using these filters.

Additional filter configurations are available, along with settings such as an end time, so that you don’t overload your system and storage by capturing all output forever. This is another great fit for troubleshooting use cases.

You can read up on the full configuration of this new feature in the official Cilium documentation.

New Hubble CLI filters

As part of the Cilium 1.15 release, Hubble CLI 0.13.0 is now released, which implements several new filters, making it easier to interrogate Kubernetes network flow data.

- Filter flows for pods and services across all namespaces

  - Sometimes you have services or pods that span namespaces, for example when Blue/Green testing. These new filters allow you to easily filter flows for specific pods and service names that exist across multiple namespaces:

    - -A, --all-namespaces – Show all flows in any Kubernetes namespace.
    - --from-all-namespaces – Show flows originating in any Kubernetes namespace.
    - --to-all-namespaces – Show flows terminating in any Kubernetes namespace.

- Improvements allow the use of both the --namespace and --pod flags

  - A small improvement that just makes sense! Previously, when you wanted to specify a pod in a specific namespace, you needed to specify --pod {namespace}/{pod_name} and couldn’t combine it with the --namespace flag. Now that niggle has been fixed.

- Filter flows based on HTTP header

  - Previously, when troubleshooting HTTP requests, if you needed to interrogate flows based on an HTTP header, you would need to output the flows as JSON and pipe them into a tool such as jq. That complexity has now been removed, and you can filter directly with hubble observe:

    - --http-header – Show only flows which match this HTTP header key:value pair (e.g. “foo:bar”).

- Filter Layer 7 flows based on HTTP URL

  - Another fantastic enhancement, bringing the ability to filter Layer 7 Hubble flows based on URL using regex:

    - --http-url – Show only flows which match this HTTP URL regular expression (e.g. “http://.*cilium.io/page/\d+”).
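The two new HTTP filters boil down to a key:value comparison and a regular-expression search. The Python sketch below mirrors that behaviour for illustration only. Comparing header names case-insensitively and using an unanchored regex search are assumptions on our part, not documented Hubble semantics.

```python
import re


def parse_header_filter(spec: str) -> tuple[str, str]:
    """Split a filter such as "foo:bar" into ("foo", "bar") at the first colon."""
    key, sep, value = spec.partition(":")
    if not sep or not key:
        raise ValueError(f"expected key:value, got {spec!r}")
    return key, value


def header_matches(spec: str, headers: list[tuple[str, str]]) -> bool:
    key, value = parse_header_filter(spec)
    # Assumption: header names compared case-insensitively, values compared exactly.
    return any(k.lower() == key.lower() and v == value for k, v in headers)


def url_matches(pattern: str, url: str) -> bool:
    # Assumption: unanchored search, so the pattern may match any part of the URL.
    return re.search(pattern, url) is not None


print(header_matches("foo:bar", [("Foo", "bar"), ("Accept", "*/*")]))              # True
print(url_matches(r"http://.*cilium.io/page/\d+", "http://docs.cilium.io/page/42"))  # True
```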

The below video takes you quickly through these new features in just over 60 seconds!

Hubble Redact

Hubble gives you deep visibility into the network flows across your cloud native platform, and can inspect and show you meaningful data about the transactions between services, such as URL parameters.

Sometimes this data can be classified as sensitive, or even potentially unnecessary when storing the network flows. In Cilium 1.15, the Hubble Redact features, implemented by two community contributors, Chris Mark and Ioannis Androulidakis, provide the ability to sanitize sensitive information from Layer 7 data flows captured by Hubble. This covers HTTP URL queries, HTTP headers, HTTP URL user info, and Kafka API keys.

Enabling the Hubble redaction feature is quick and easy using Helm or Cilium Config.
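Conceptually, redaction rewrites those fields before a flow is stored or displayed. The Python sketch below applies the same idea to a URL and a header list. The placeholder string REDACTED, the choice to keep query keys while masking their values, and the deny-list style for headers are assumptions for illustration. They do not describe Hubble's exact output or configuration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

PLACEHOLDER = "REDACTED"  # hypothetical placeholder text


def redact_url(url: str, query: bool = True, user_info: bool = True) -> str:
    """Mask query-parameter values and user:password info in a URL."""
    parts = urlsplit(url)
    netloc = parts.netloc
    if user_info and "@" in netloc:
        netloc = f"{PLACEHOLDER}@{netloc.rsplit('@', 1)[1]}"
    q = parts.query
    if query and q:
        q = urlencode([(key, PLACEHOLDER) for key, _ in parse_qsl(q, keep_blank_values=True)])
    return urlunsplit((parts.scheme, netloc, parts.path, q, parts.fragment))


def redact_headers(headers: list[tuple[str, str]], deny: set[str]) -> list[tuple[str, str]]:
    """Mask the values of headers whose (case-insensitive) name is in `deny`."""
    deny_lower = {name.lower() for name in deny}
    return [(k, PLACEHOLDER if k.lower() in deny_lower else v) for k, v in headers]


print(redact_url("http://alice:pw@shop.local/cart?token=abc&id=7"))
print(redact_headers([("Authorization", "Bearer xyz"), ("Accept", "*/*")], {"authorization"}))
```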

We’ve recorded a few quick videos to show you the Hubble redact feature in action!

- Redact Kafka API Keys

- Redact UserInfo in HTTP request

- Redact HTTP Headers

Looking Ahead

Cilium 1.15 is the first release since the major milestone of CNCF graduation, and here at Isovalent we’re proud of all the contributions we’ve made, alongside the rest of the Cilium community, to bring the project to where it is today.

With each passing year, as the community continues to grow and deepen its support for the project, it instills a profound sense of confidence in more and more end user organizations who choose to adopt and integrate it into their infrastructure.

Graduation isn’t the end; rather, it serves as a validation of the breakthroughs Cilium will continue to pioneer in the years to come.

The Isovalent team is excited to continue playing our leading role in the Cilium project, as we embark on the adventure of joining the Cisco family.

Getting Started

To get started with Cilium, use one of the resources below:

- Join the Cilium 1.15 eBPF and Cilium eCHO show (Part 1 and Part 2)

- Learn more about Cilium (Introduction, tutorials, AMAs, …)

- Get involved in the Cilium community

- Join Cilium Slack to interact with the Cilium community

Previous Releases

- Cilium 1.14 – Effortless Mutual Authentication, Service Mesh, Networking Beyond Kubernetes, High-Scale Multi-Cluster, and Much More

- Cilium 1.13: Gateway API, mTLS datapath, Service Mesh, BIG TCP, SBOM…

- Cilium 1.12: Ingress, Multi-Cluster, Service Mesh, External Workloads, and much more

- Cilium 1.11: Service Mesh Beta, Topology Aware Routing, OpenTelemetry, …

- Cilium 1.10: WireGuard, BGP Support, Egress IP Gateway, New Cilium CLI, XDP Load Balancer, Alibaba Cloud Integration and more

- Cilium 1.9: Maglev, Deny Policies, VM Support, OpenShift, Hubble mTLS, Bandwidth Manager, eBPF Node-Local Redirect, Datapath Optimizations, and more