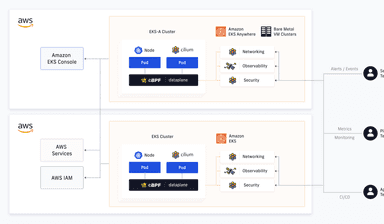

How to Deploy Cilium and Egress Gateway in Elastic Kubernetes Service (EKS)

Kubernetes changes the way we think about networking. In an ideal Kubernetes world, the network would be flat, and the Pod network would control all routing and security between applications using Network Policies. In many enterprise environments, though, the applications hosted on Kubernetes need to communicate with workloads outside the cluster, subject to connectivity constraints and security enforcement. Because of the nature of these networks, traditional firewalling usually relies on static IP addresses (or at least IP ranges). This can make it difficult to integrate a Kubernetes cluster, which has a varying, and at times dynamic, number of nodes, into such a network. Cilium’s Egress Gateway feature changes this by allowing you to specify which nodes a pod should use to reach the outside world. This post walks you through deploying Cilium and Egress Gateway in Elastic Kubernetes Service (EKS).

What is an Egress Gateway?

The Egress Gateway feature was first introduced in Cilium 1.10.

Egress Gateway allows you to specify a single egress IP that pods use when they reach out to one or more CIDRs outside the cluster.

Note: This post covers the 1.14 release of Isovalent Enterprise for Cilium. If you are on the open-source version of Cilium and would like to migrate or upgrade to Isovalent Enterprise for Cilium, you can contact sales@isovalent.com.

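For example, consider a policy that selects pods with the app=test-app label, targets the 1.2.3.0/24 destination range, and sets 10.1.2.1 as the egress IP.

With such a policy, all pods that match the app=test-app label are routed through the 10.1.2.1 IP when they reach out to any address in the 1.2.3.0/24 IP range, which is outside the cluster.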
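To make the matching logic concrete, here is a minimal Python sketch of how such a single-IP policy could be evaluated for one connection. The `EgressPolicy` class and `egress_ip_for` function are hypothetical illustrations, not Cilium’s API or eBPF datapath code; only the label, CIDR, and IP values come from the example above.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Mapping, Optional, Tuple


@dataclass(frozen=True)
class EgressPolicy:
    """Hypothetical, simplified model of a single-IP egress gateway policy."""
    pod_labels: Mapping[str, str]       # matchLabels-style pod selector
    destination_cidrs: Tuple[str, ...]  # destinations that trigger the policy
    egress_ip: str                      # source IP used for matching traffic


def egress_ip_for(policy: EgressPolicy, labels: Mapping[str, str], dst: str) -> Optional[str]:
    """Return the egress IP for traffic from a pod to dst, or None if the policy does not apply."""
    # Every selector label must be present on the pod with the same value.
    if any(labels.get(key) != value for key, value in policy.pod_labels.items()):
        return None
    addr = ip_address(dst)
    # The destination must fall inside one of the policy's CIDRs.
    if any(addr in ip_network(cidr) for cidr in policy.destination_cidrs):
        return policy.egress_ip
    return None


policy = EgressPolicy({"app": "test-app"}, ("1.2.3.0/24",), "10.1.2.1")
print(egress_ip_for(policy, {"app": "test-app"}, "1.2.3.42"))  # 10.1.2.1
print(egress_ip_for(policy, {"app": "other"}, "1.2.3.42"))     # None
print(egress_ip_for(policy, {"app": "test-app"}, "8.8.8.8"))   # None
```

Both conditions must hold: a matching pod reaching a destination outside 1.2.3.0/24 keeps its normal egress path.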

How can you achieve High Availability for an Egress Gateway?

While Egress Gateway in Cilium is a great step forward, most enterprise environments should not rely on a single point of failure for network routing. For this reason, Cilium Enterprise 1.11 introduced Egress Gateway High Availability (HA), which supports multiple egress nodes. The nodes acting as egress gateways will then load-balance traffic in a round-robin fashion and provide fallback nodes in case one or more egress nodes fail.

Multiple egress nodes are configured with the egressGroups parameter in the IsovalentEgressGatewayPolicy resource specification.

For example, such a policy can route all pods that match the app=test-app label and reach out to the 1.2.3.0/24 IP range through a group of two cluster nodes bearing the egress-group=egress-group-1 label, using the first IP address associated with the eth1 network interface of these nodes.

Note that it is also possible to specify an egress IP address instead of an interface.
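The node selection described above can be sketched in Python: pick every node carrying the egress-group=egress-group-1 label, take the first IP on its eth1 interface, and spread new connections across those IPs. The node names, IP addresses, and helper function are hypothetical, and the round-robin `cycle` simply mirrors the text’s description; Cilium’s actual distribution mechanism may differ.

```python
from itertools import cycle
from typing import Dict, List, Tuple

# Hypothetical inventory: node name -> (node labels, {interface name: [IPs]}).
Node = Tuple[Dict[str, str], Dict[str, List[str]]]
NODES: Dict[str, Node] = {
    "node-a": ({"egress-group": "egress-group-1"}, {"eth1": ["10.0.1.10", "10.0.1.11"]}),
    "node-b": ({"egress-group": "egress-group-1"}, {"eth1": ["10.0.2.10"]}),
    "node-c": ({"role": "worker"}, {"eth1": ["10.0.3.10"]}),
}


def egress_group_ips(nodes: Dict[str, Node], key: str, value: str, interface: str) -> List[str]:
    """Return the first IP of `interface` on every node carrying the key=value label."""
    ips = []
    for name in sorted(nodes):  # sort for deterministic ordering
        labels, interfaces = nodes[name]
        if labels.get(key) == value and interfaces.get(interface):
            ips.append(interfaces[interface][0])
    return ips


gateways = egress_group_ips(NODES, "egress-group", "egress-group-1", "eth1")
next_gateway = cycle(gateways)  # simple round-robin over the group
print(gateways)                                # ['10.0.1.10', '10.0.2.10']
print([next(next_gateway) for _ in range(4)])  # ['10.0.1.10', '10.0.2.10', '10.0.1.10', '10.0.2.10']
```

Specifying an egress IP instead of an interface would replace the `interfaces[interface][0]` lookup with a fixed address per node.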

Requirements

On the designated gateway nodes, Cilium must use network-facing interfaces and IP addresses to send traffic out of the cluster. The operator must provision and configure these interfaces and IP addresses, and the process is highly dependent on the networking environment. For example, in AWS/EKS, and depending on the requirements, this may mean creating one or more Elastic Network Interfaces with one or more IP addresses and attaching them to the instances that serve as gateway nodes, so that AWS can correctly route traffic flowing to and from those instances. Other cloud providers have similar networking requirements and constructs.

Enabling the egress gateway feature requires enabling both (BPF) masquerading and the kube-proxy replacement in the Cilium configuration.

High Availability and Health Checks

To achieve a highly available configuration, the operator must specify multiple gateway nodes in a policy. When multiple gateway nodes are specified and one of them is detected as unhealthy, Cilium removes it from the pool of gateway nodes so that traffic stops being forwarded to it. The period used for the health checks can be configured in the Cilium Helm values.
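The pool behavior can be illustrated with a small Python model: a gateway stays in the pool only while its last successful health check is within the timeout, and flows are spread over the remaining healthy nodes. `GatewayPool`, its methods, and the node names are hypothetical; this is not how Cilium implements health checking internally, and the modulo-based `pick` is just one simple way to distribute flows.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class GatewayPool:
    """Hypothetical model: drop gateways whose last successful health check is too old."""
    timeout: float                                   # seconds, e.g. a 1-second timeout
    last_ok: Dict[str, float] = field(default_factory=dict)

    def record_ok(self, node: str, now: float) -> None:
        # Remember when this node last passed a health check.
        self.last_ok[node] = now

    def healthy(self, now: float) -> List[str]:
        # A node stays in the pool only if it passed a check within `timeout` seconds.
        return sorted(n for n, t in self.last_ok.items() if now - t <= self.timeout)

    def pick(self, flow_id: int, now: float) -> str:
        nodes = self.healthy(now)
        if not nodes:
            raise RuntimeError("no healthy egress gateway")
        # Spread flows over healthy nodes only; removed nodes stop receiving traffic.
        return nodes[flow_id % len(nodes)]


pool = GatewayPool(timeout=1.0)
for node in ("gw-a", "gw-b", "gw-c"):
    pool.record_ok(node, now=0.0)
pool.record_ok("gw-a", now=1.5)
pool.record_ok("gw-b", now=1.5)       # gw-c stops answering health checks
print(pool.healthy(now=2.0))          # ['gw-a', 'gw-b']
print(pool.pick(flow_id=3, now=2.0))  # gw-b
```

A shorter timeout removes failed nodes faster, at the cost of evicting nodes that are only briefly slow to answer.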

HA Egress Gateway walk-through

Setup

For this walk-through, we set up a monitoring system using Cilium Enterprise 1.10 on top of an AWS EKS cluster.

We are using two containerized apps to monitor traffic:

- An HTTP echo server that returns the caller’s IP address (similar to ifconfig.me/ip), deployed on an EC2 instance outside of the Kubernetes cluster;

- A monitoring server that sends HTTP requests to the echo server every 50ms and exposes Prometheus metrics on the requests, such as the HTTP load time, the number of HTTP errors, and whether the returned IP is one of the known egress node IPs.
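As a rough illustration of what the monitoring server measures, the Python sketch below summarizes a batch of request results: requests per second per returned IP, the percentage of errors, and how many answers came back from a known egress IP. The result format and `summarize` function are hypothetical (the monitor’s real code and metric names are not shown here); the four IPs are the zone-specific egress IPs used in this walk-through.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple

# Zone-specific egress IPs from this walk-through; any other returned IP bypassed the gateways.
KNOWN_EGRESS_IPS = {"10.2.200.10", "10.2.201.10", "10.2.202.10", "10.2.203.10"}


def summarize(results: Iterable[Tuple[Optional[str], bool]],
              window_s: float) -> Tuple[Dict[str, float], float, int]:
    """results: one (returned_ip or None, ok) pair per request seen during window_s seconds."""
    per_ip: Counter = Counter()
    errors = total = via_gateway = 0
    for ip, ok in results:
        total += 1
        if not ok:
            errors += 1          # failed requests have no returned IP
            continue
        per_ip[ip] += 1
        if ip in KNOWN_EGRESS_IPS:
            via_gateway += 1
    rates = {ip: n / window_s for ip, n in per_ip.items()}  # requests per second per IP
    error_pct = 100.0 * errors / total if total else 0.0
    return rates, error_pct, via_gateway


# Half a second at one request every 50ms: 10 requests, one of them failed.
samples = [("10.2.200.10", True)] * 5 + [("10.2.203.10", True)] * 4 + [(None, False)]
rates, error_pct, via_gateway = summarize(samples, window_s=0.5)
print(rates)        # {'10.2.200.10': 10.0, '10.2.203.10': 8.0}
print(error_pct)    # 10.0
print(via_gateway)  # 9
```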

The example walk-through runs in the Oregon (us-west-2) AWS region, which features four availability zones: us-west-2a, us-west-2b, us-west-2c, and us-west-2d.

For a proper HA setup, and since our EKS cluster is spread across all four zones, we set up one egress node per zone. Each egress node has one extra Elastic Network Interface attached to it, with an IP in the VPC’s subnet. Each of these IPs is thus specific to an availability zone:

- 10.2.200.10 for us-west-2a;

- 10.2.201.10 for us-west-2b;

- 10.2.202.10 for us-west-2c;

- 10.2.203.10 for us-west-2d.

The nodes run a user data script that retrieves and attaches the IP assigned to their zone.

Note that this setup is specific to AWS. On other platforms, a different method would be needed to assign IPs to the egress nodes.

Prometheus scrapes the monitoring server, and we built a Grafana dashboard based on its metrics.

This dashboard displays:

- The stacked number of requests per second per outbound IP (about 20 req/s in total, with a 50ms delay between requests);

- The load times per outbound IP;

- The % of HTTP errors in total requests.

Note that the graphs display rates over 1 minute, so they cannot reliably measure unavailability windows. To calculate unavailability in this walk-through, we relied on the monitor container logs instead.

Adding an Egress Gateway Policy

To demonstrate the HA Egress Gateway feature, we apply a Cilium Egress Policy to the cluster. Its podSelector is very similar to the previous example, while its egressGroups parameter is a bit different.

With this policy in place, pods with the app.kubernetes.io/name=egress-gw-monitor label in the cilium-egress-gateway namespace go through one of the egress nodes when reaching out to any IP in the 10.2.0.0/16 range, which includes our echo server instance.

Let’s see what happens when adding the Cilium Egress Policy to the cluster.

Before applying the policy, all traffic reached the echo server directly from the pod’s IP (10.2.3.165, in red), since our cluster uses AWS ENI with direct routing. After applying it, traffic to the echo server goes through the four egress IPs set up on the egress nodes, in a round-robin fashion.

Load times depend on the number of hops and the distance traveled. Direct connections (red) are the fastest, followed by connections through the us-west-2d egress node (in the same zone as the monitor pod) and the us-west-2a egress node (in the same zone as the echo server). Connections through the two other zones are the slowest, since they require a hop through a third availability zone.

How good is the resiliency with Egress Gateway(s)?

Now it is time to test the resiliency of the HA setup by rebooting one or more egress instances.

When rebooting a single egress node (in us-west-2d in this case), a few packets are lost, but the load is quickly rebalanced across the three remaining nodes. In our tests with a 1-second health check timeout, we recorded timeouts for 3 seconds when the node went down.

Traffic comes back to normal without losses after the node is back up.

When rebooting three of the four egress nodes, the timeout period was 3 seconds if all three went down at the same time, and up to 6 seconds when they went down in several batches. After that, all traffic was rebalanced onto the last remaining node until the others returned. The same happens if we terminate the nodes and let AWS autoscaling spawn new ones (though the new nodes take longer to come up).
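These timeout windows were measured from the monitor container logs rather than from the dashboard. A minimal Python sketch of such a measurement follows, assuming a hypothetical log already parsed into (timestamp in seconds, success) pairs, since the actual log format is not shown. Each window runs from the first failed request to the next successful one.

```python
from typing import List, Optional, Tuple


def unavailability_windows(log: List[Tuple[float, bool]]) -> List[float]:
    """Return the duration (s) of each contiguous run of failed requests.

    log: (timestamp_s, ok) pairs sorted by time. A window starts at the first
    failure and ends at the next success; an unfinished run at the end of the
    log is not counted.
    """
    windows: List[float] = []
    start: Optional[float] = None
    for ts, ok in log:
        if not ok and start is None:
            start = ts                   # a failure run begins
        elif ok and start is not None:
            windows.append(ts - start)   # the run ends at the first success
            start = None
    return windows


# One request every 50ms for 5 seconds; requests fail from t=1.0s until t=4.0s.
log = [(round(i * 0.05, 2), not (20 <= i < 80)) for i in range(100)]
print(unavailability_windows(log))  # [3.0]
```

With one request every 50ms, each measured window is accurate to roughly one request interval.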

Conclusion

Hopefully, this post gave you a good overview of deploying Cilium and Egress Gateway in Elastic Kubernetes Service (EKS). If you have any feedback on the solution, please share it with us, and let’s see how Cilium can help with your use case.

Try it out

Start with the Egress Gateway lab and explore Egress Gateway in action.

Further Reading

To dive deeper into the topic of Egress Gateway, check out these two videos:

Raphaël is a Senior Technical Marketing Engineer with Cloud Native networking and security specialists Isovalent, creators of the Cilium eBPF-based networking project. He works on Cilium, Hubble & Tetragon and the future of Cloud-Native networking & security using eBPF.

An early adopter of DevOps principles, he has practiced Configuration Management and Agile principles in Operations for many years, with special involvement in the Puppet and Terraform communities.